Visual Intelligence is one of the few AI-powered features of iOS 18 that we regularly use. Just hold down the Camera Control button on your iPhone 16 (or trigger it with Control Center on an iPhone 15 Pro), point your phone at something, and hit the button.

If it’s a sign in a foreign language, you can translate it. If there’s a phone number, call it in one tap. Address? Add it to your contacts or navigate to it. Pointing your phone at a business may pull up hours and contact info, or even a menu if it’s a restaurant. And of course, it can identify all sorts of plants and animals, landmarks, famous artwork, and more. If Apple’s built-in AI doesn’t know enough, you can tap the Ask button to ask ChatGPT.

Once you remember it’s there, you’ll find yourself using it all the time. It’s genuinely useful, but it has one serious limitation: in iOS 18, it works only on what your phone’s camera can see.

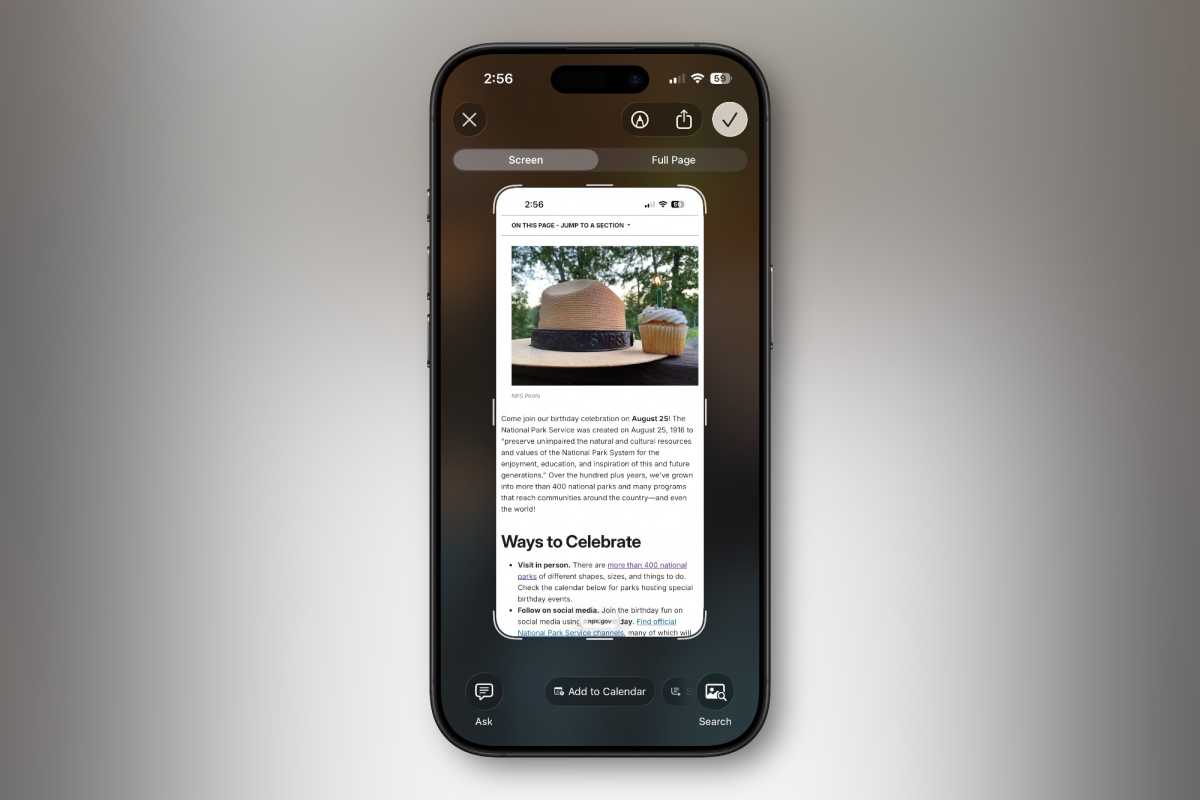

With iOS 26, Apple fixes that by building Visual Intelligence into the screenshot interface. Now you can use the same AI-powered features on any screenshot, from any app. It can be a web page, a video, a video game; if you can take a screenshot, you can use Visual Intelligence on it.

Start with a screenshot

Visual Intelligence always requires an iPhone 15 Pro or iPhone 16 (or later), as it is a core Apple Intelligence feature.

To take a screenshot in iOS 26, just do what you’ve always done: press the Volume Up and Side buttons at the same time. You’ll notice the screenshot preview is large now, instead of a little thumbnail in the corner. (You can change this in Settings > General > Screen Capture.)

You may be in markup mode by default—if so, tap the little pen icon at the top of the screen to see Visual Intelligence options instead.

Visual Intelligence on screenshots

Using Visual Intelligence on screenshots works very much like it does with the camera.

To the left, you’ll see an “Ask” button that will ask ChatGPT about the content of the screenshot. To the right is an image search button that performs a reverse image search with Google. That’s a great way to look up an item you want to buy or clothing you see someone wearing.

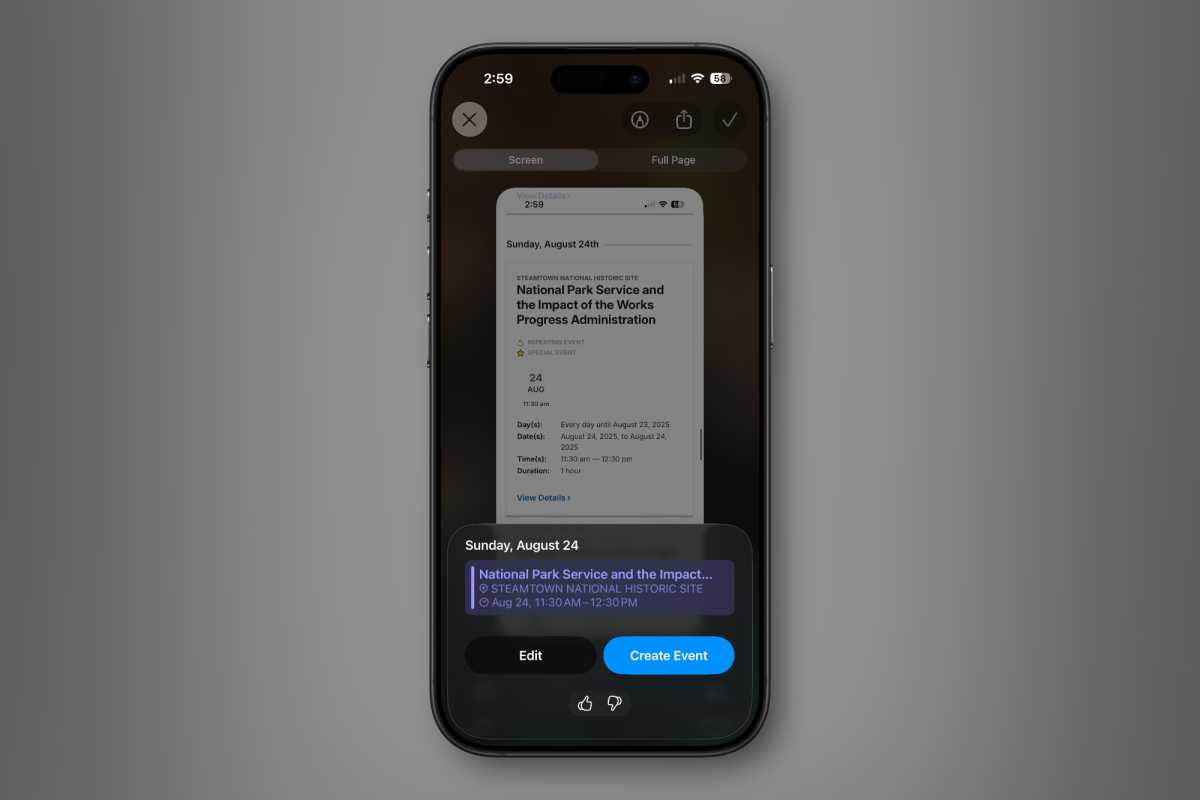

In the center, you’ll see contextual options based on the content of the screenshot. If there are phone numbers, you’ll see options to call them with one tap. If there’s an address, you can look it up in Maps. Long blocks of text will have the option to summarize them with AI or read them aloud. Obvious plants, animals, or landmarks may be automatically recognized and show up here; a quick tap brings up info about them.

Of course, you may not want to search the entire screenshot. To focus on just one element, simply circle it with your finger. Then, tapping the Ask button will ask ChatGPT about only that object, the center will show contextual options only for the circled area, and the Google image search will focus only on the part of the image you highlighted.

And that’s really all there is to it. With iOS 26, these Visual Intelligence features are automatically available for screenshots on any iPhone 15 Pro or later. Just make sure you’re not in markup mode (tap the pen icon to switch) and that Apple Intelligence is enabled in Settings.
